A proposed class action suit claims that Apple is hiding behind privacy claims to avoid stopping both the storage of child sexual abuse material on iCloud and alleged grooming over iMessage.

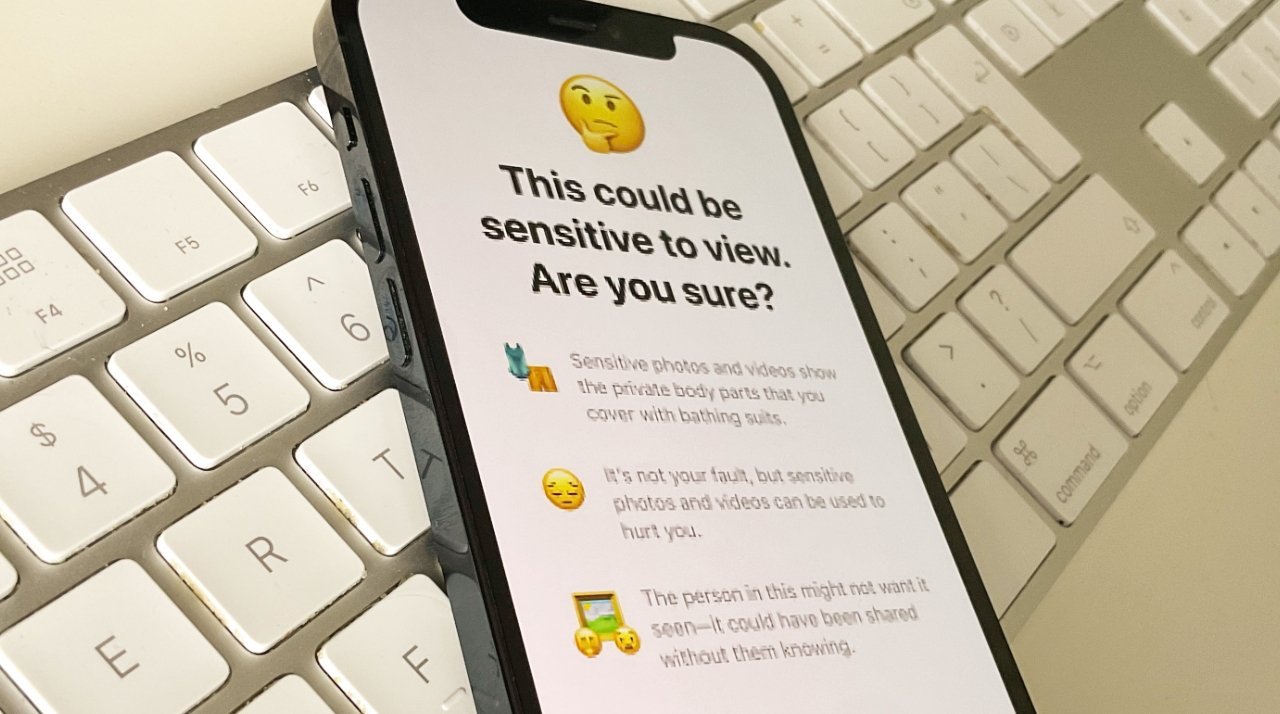

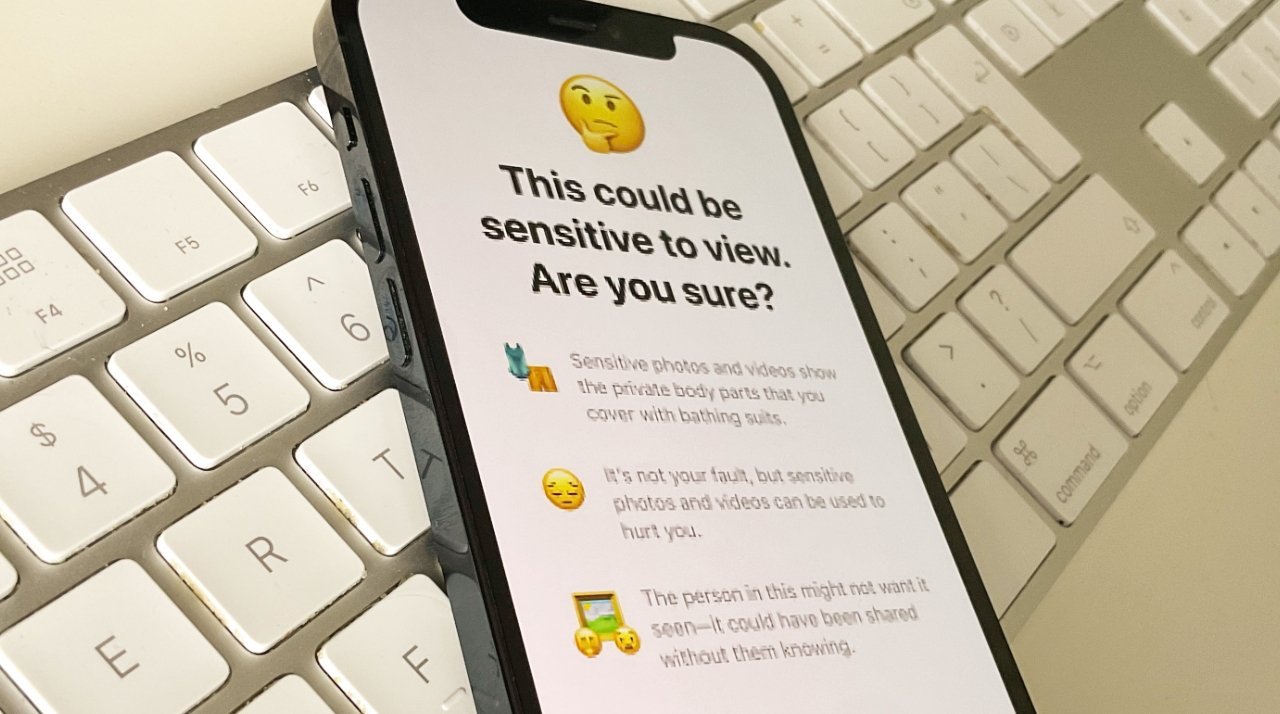

Apple cancelled its major CSAM proposals but introduced features such as automatic blocking of nudity sent to children

Following a UK organization’s claims that Apple is vastly underreporting incidents of child sexual abuse material (CSAM), a new proposed class action suit says the company is “privacy-washing” its responsibilities.

The filing, made with the US District Court for the Northern District of California, has been brought on behalf of an unnamed 9-year-old plaintiff. The complaint, which identifies her only as Jane Doe, says that she was coerced into making and uploading CSAM on her iPad.