When researchers used developer tools to send Apple Intelligence queries that were deliberately ambiguous about race and gender, biases surfaced in the results.

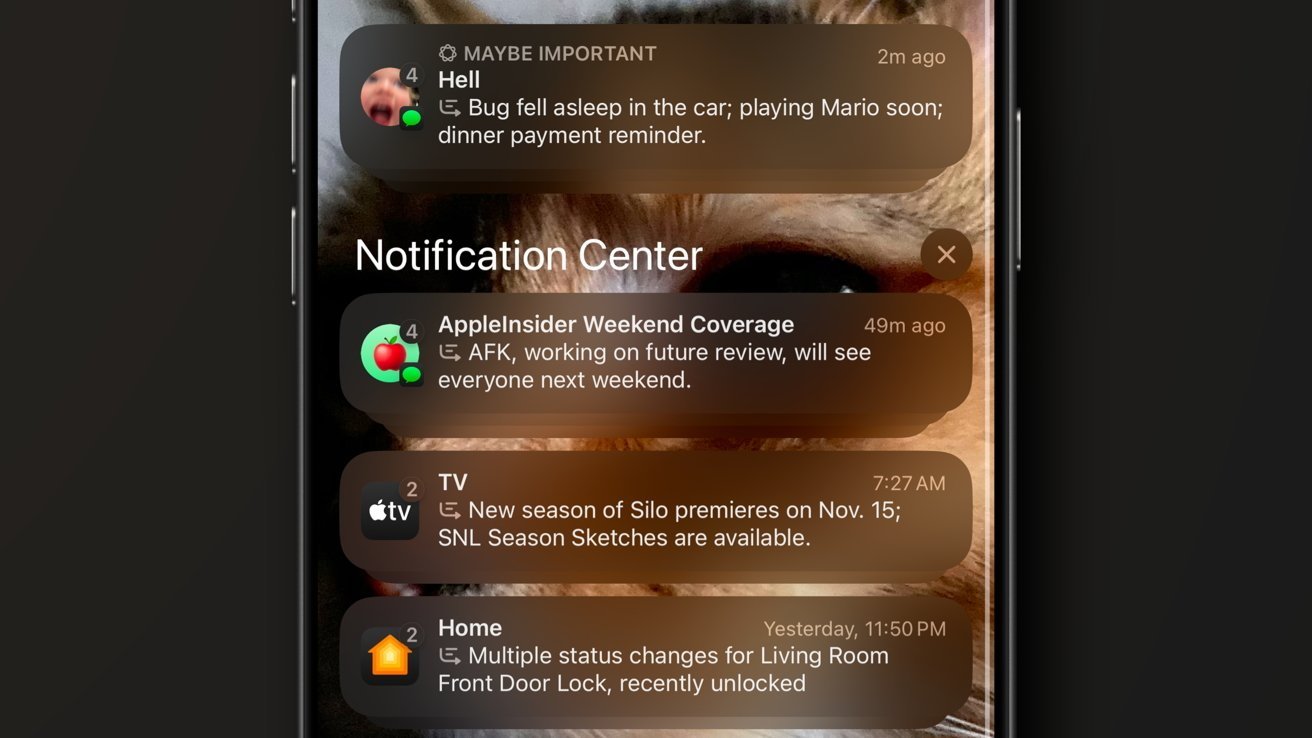

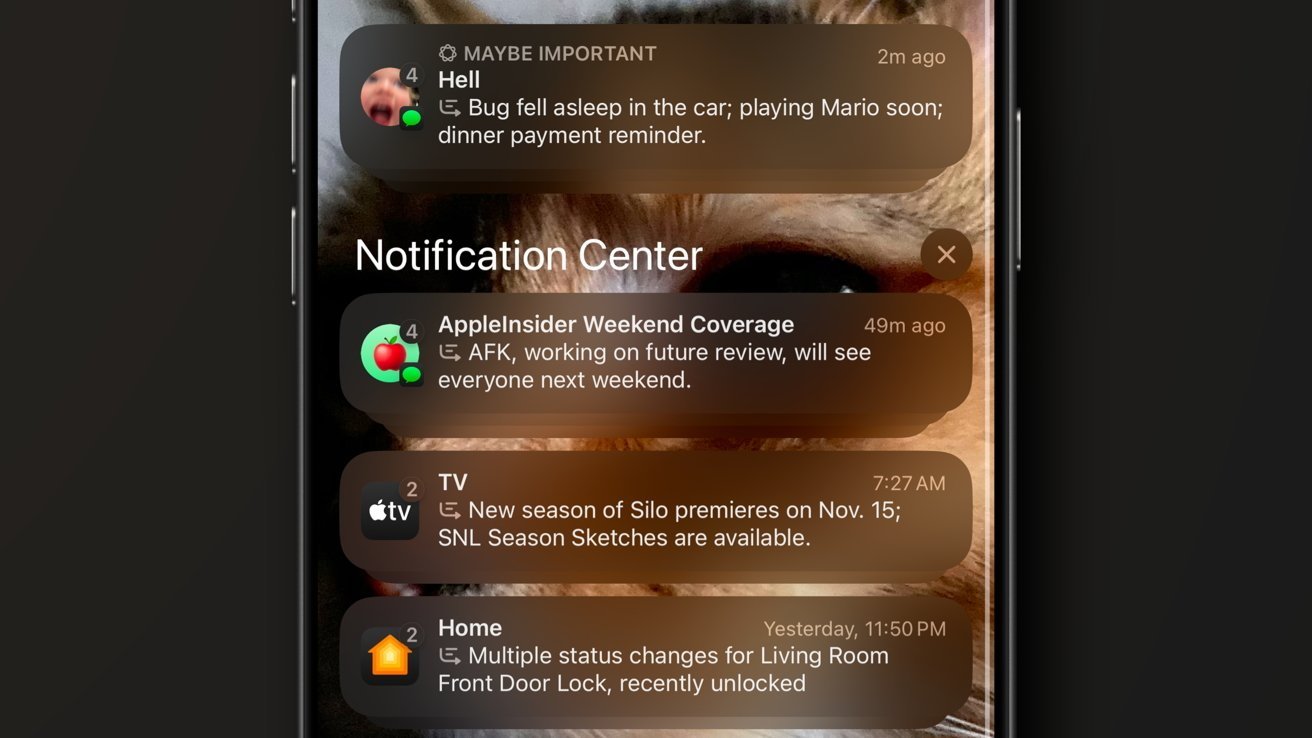

Examples of notification summaries on an iPhone

AI Forensics, a German nonprofit, analyzed more than 10,000 notification summaries generated by Apple's AI feature. The report suggests that Apple Intelligence treats White people as the "default" and applies gender stereotypes when no gender is specified.

According to the report, Apple Intelligence tends to omit a person's ethnicity when that person is White. By contrast, when a message mentioned any other ethnicity, the notification summary regularly kept that detail.