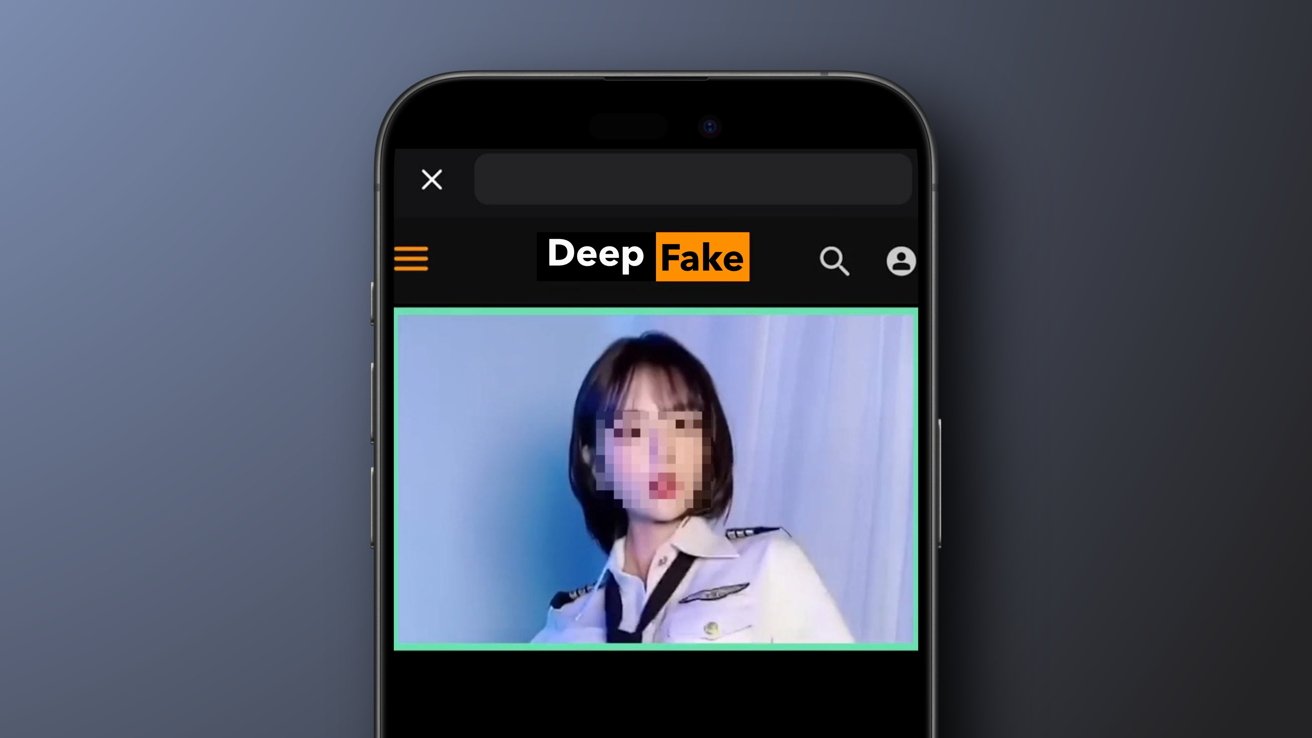

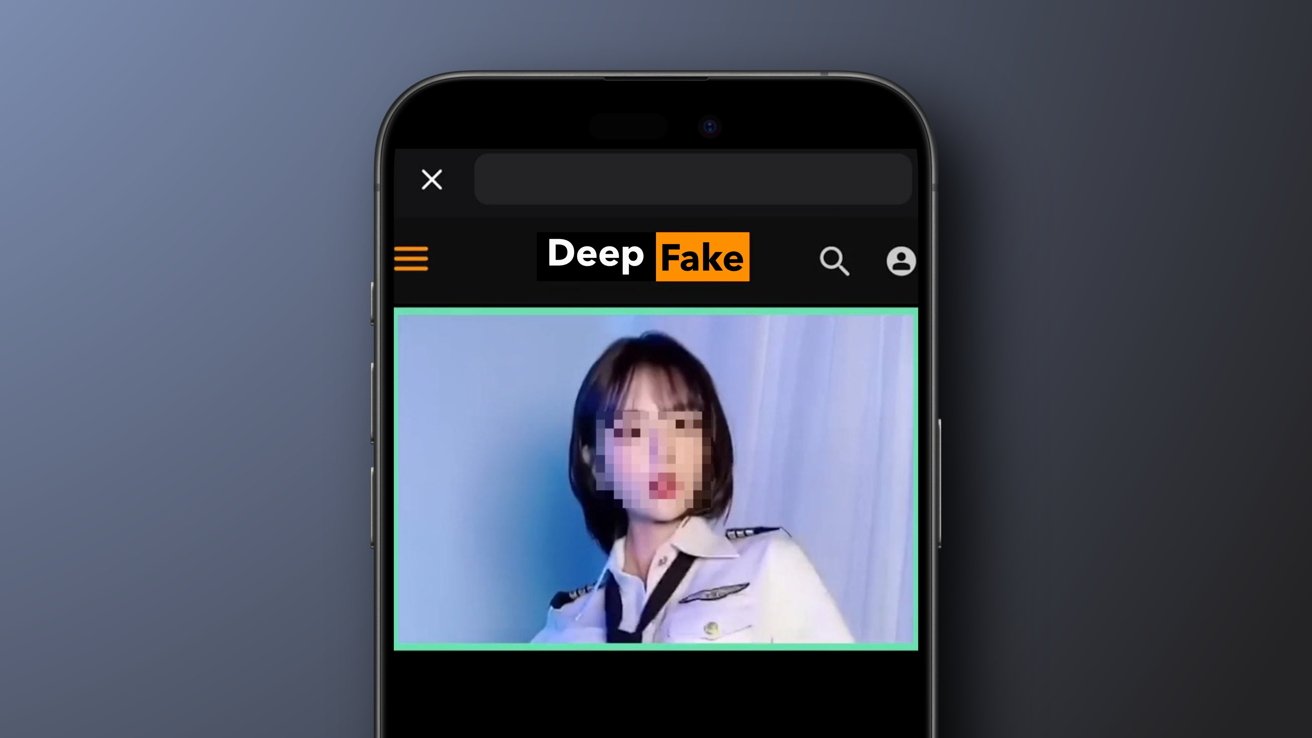

Congress has sent pointed letters to tech executives, including Apple’s Tim Cook, raising concerns over the prevalence of deepfake non-consensual intimate images.

Non-consensual intimate images being made with apps on iPhone

The letters stem from earlier reports about nude deepfakes being created using dual-use apps. Advertisements promoting face-swapping apps popped up all over social media, and users were using those apps to place faces into nude or pornographic images.

According to a new report from 404 Media, the US Congress is taking action based on these reports, asking tech companies how they plan to stop non-consensual intimate images from being generated on their platforms. Letters were sent to Apple, Alphabet, Microsoft, Meta, ByteDance, Snap, and X.